Stop Overbuilding Your Data Stack

Vol. 14: Forget “enterprise” overkill — this 5-tool setup gets you clarity fast, without the drama.

Welcome back to The Datapreneur — the newsletter where we stop overbuilding, start focusing, and turn data into decisions that actually move the business forward.

This week’s issue is for anyone who’s ever sat in a meeting where the stack looked impressive, the invoices were massive… but the team still couldn’t answer a simple question like:

“Are we on track?”

Because here’s the truth: most businesses don’t need fifteen tools and a seven-figure contract. They need a setup that’s light, fast, and “good enough” for 80% of the decisions. In 2025, clarity beats complexity — and I’ll show you the exact five tools I’d use to get there.

TL;DR

Most businesses don’t need a heavyweight enterprise stack.

They need something quick to set up, simple to maintain, and strong enough to answer 80% of their weekly business questions.

My 2025 recipe:

→ BigQuery as the warehouse,

→ Fivetran or Airbyte for ingestion,

→ Dataform for transformations,

→ Looker Studio for BI,

→ and Airflow only when orchestration truly matters.

Clarity comes first; everything else can wait.

The question that never goes away

If I had a dollar for every time someone asked me which stack I’d build if I were starting from scratch today, I’d probably have enough to cover a year of Snowflake.

The funny part is, most people don’t need anything close to Snowflake.

What most companies need is not an “enterprise” stack with fifteen tools and seven-figure contracts.

They need a lean, durable setup that can be stood up in a week, costs little to run, and provides trustworthy numbers for the handful of decisions that matter.

As Slawomir put it in one of our conversations recently,

“It’s so easy to have an overkill solution with multiple tools.

More than 90% of businesses have data of the size that would fit a single instance.

You don’t need to spend millions or overcomplicate.”

So let’s talk about the five tools I’d actually use to launch a modern stack in 2025.

The 5 tools

1. Warehouse

At the core, I’d anchor everything in Google BigQuery. It’s serverless, elastic, and SQL-first, which means no infra babysitting and no upfront sizing exercises.

With on-demand pricing, you pay only for the data your queries scan and the data you store, and if you need cheap object storage for logs or raw files, Cloud Storage integrates seamlessly.
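To make the pay-per-use model concrete, here is a back-of-the-envelope estimator. The prices and free-tier allowances are assumptions based on US multi-region on-demand list prices as I understand them at the time of writing (about $6.25 per TiB scanned, first TiB free each month; about $0.02 per GiB-month of active logical storage, first 10 GiB free). The workload is hypothetical, so check Google’s current pricing page before budgeting.

```python
"""Back-of-the-envelope BigQuery on-demand cost estimate (a sketch, not a quote)."""

# ASSUMPTION: approximate US multi-region list prices at the time of writing.
# Verify against the official BigQuery pricing page before relying on them.
PRICE_PER_TIB_SCANNED = 6.25        # USD per TiB processed by on-demand queries
FREE_TIB_SCANNED_PER_MONTH = 1.0    # monthly free tier for query processing
PRICE_PER_GIB_STORED = 0.02         # USD per GiB-month of active logical storage
FREE_GIB_STORED_PER_MONTH = 10.0    # monthly free tier for storage


def monthly_cost_usd(tib_scanned: float, gib_stored: float) -> float:
    """Estimate one month of spend from data scanned and data stored."""
    billable_tib = max(0.0, tib_scanned - FREE_TIB_SCANNED_PER_MONTH)
    billable_gib = max(0.0, gib_stored - FREE_GIB_STORED_PER_MONTH)
    query_cost = billable_tib * PRICE_PER_TIB_SCANNED
    storage_cost = billable_gib * PRICE_PER_GIB_STORED
    return round(query_cost + storage_cost, 2)


if __name__ == "__main__":
    # HYPOTHETICAL small business: 3 TiB scanned and 200 GiB stored per month.
    print(monthly_cost_usd(tib_scanned=3.0, gib_stored=200.0))
```

Under these assumptions, the example workload works out to about $16.30 a month.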

One analytics project, a dataset per domain, a raw layer and a modeled layer — that’s all it takes to stay clean.
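A minimal sketch of that layout, assuming a `raw_` / `modeled_` prefix per domain. The project ID `acme-analytics` and the domains `marketing`, `sales`, and `product` are hypothetical placeholders, not a prescribed standard. The code only generates the dataset IDs; you would still create them in the console, with the `bq` CLI, or in your infrastructure code.

```python
"""Sketch of a one-project, dataset-per-domain, raw-vs-modeled layout."""

# HYPOTHETICAL names: swap in your own project ID and business domains.
PROJECT_ID = "acme-analytics"
DOMAINS = ["marketing", "sales", "product"]
LAYERS = ["raw", "modeled"]  # raw = as landed by ingestion, modeled = cleaned


def dataset_ids(project_id: str, domains: list[str], layers: list[str]) -> list[str]:
    """Return fully qualified dataset IDs such as 'acme-analytics.raw_marketing'."""
    return [f"{project_id}.{layer}_{domain}" for domain in domains for layer in layers]


if __name__ == "__main__":
    for dataset in dataset_ids(PROJECT_ID, DOMAINS, LAYERS):
        print(dataset)
```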

2. Ingestion

For ingestion, I’d pick Fivetran or Airbyte. Both save you from the time sink of writing connectors.

Fivetran is fast, low-maintenance, and great to start with, though it can get expensive at scale.

Airbyte, available as open source or as Airbyte Cloud, gives you more flexibility, and self-hosting the open-source version gives you the most cost control.

Either way, the goal is to stop wasting months on plumbing.

3. Transformations

Dataform is the path of least resistance if you’re on GCP.

It handles versioned SQL models, dependencies, tests, and scheduling without friction.

If you’re already in another ecosystem, dbt Core or SQLMesh are strong contenders.

But I stay away from dbt Cloud in the early stage — the costs and lock-in outweigh the benefits.

My principle here is simple: keep transformations close to the warehouse, and keep them in SQL until you truly need Python.

4. BI

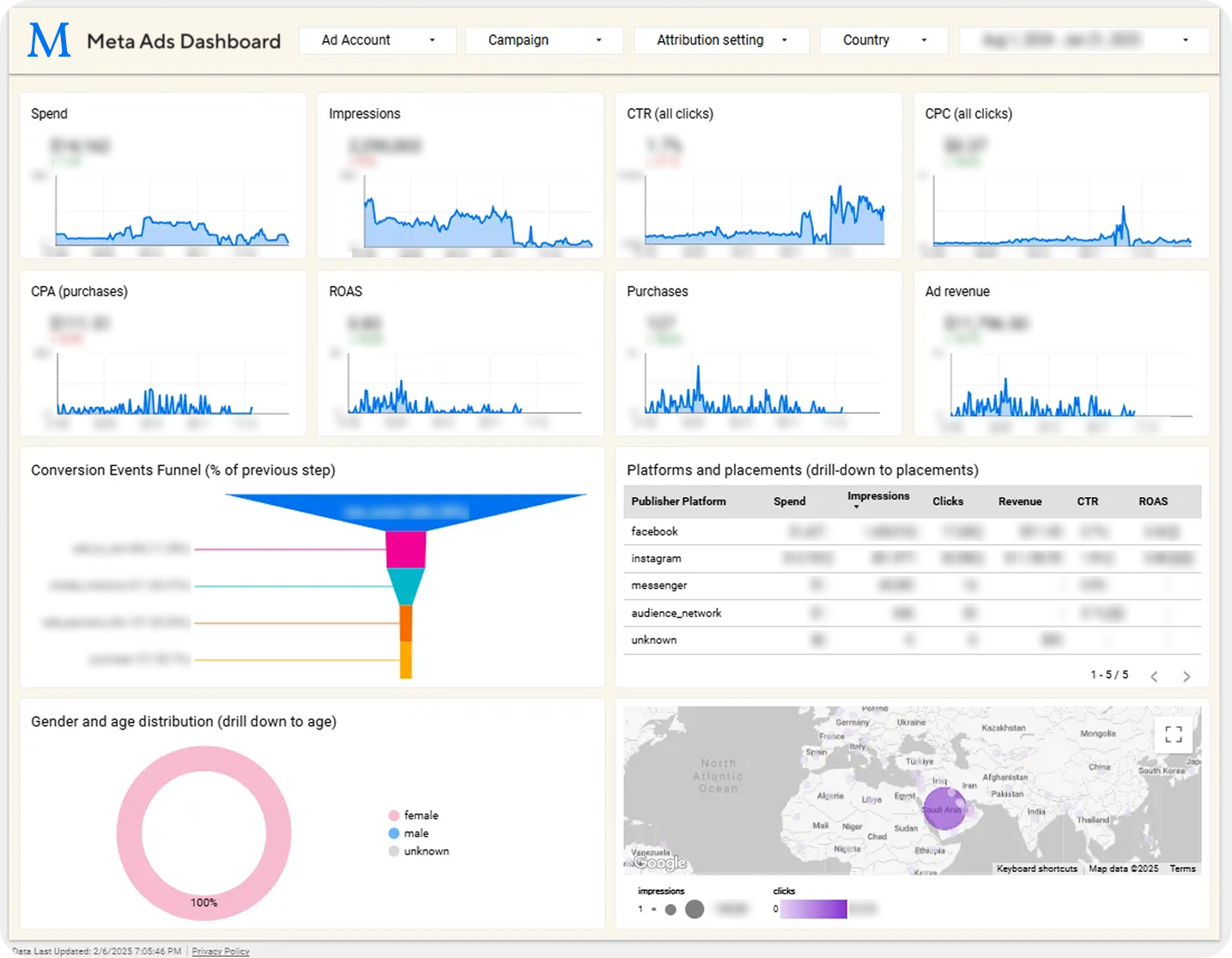

I’d start with Looker Studio.

It’s free, quick to set up, and “good enough” for 80% of stakeholder views — exec summaries, weekly performance reports, or quick channel explorations.

Take one of our projects as an example — the visuals might not be as dazzling as what you’d get in Tableau, but this dashboard nails what really matters: it screams insight and sparks action:

Tableau and Power BI are great tools, but I’ve retired the reflex to start there.

Most SMBs won’t outgrow Looker Studio until much later.

5. Orchestration

Finally, orchestration, and this is where discipline matters: don’t reach for Airflow until you actually need it.

In the beginning, Dataform’s built-in scheduling is plenty.

Only when you have multiple pipelines, dependencies, and SLAs does Airflow (via Cloud Composer or a lean self-hosted setup) become worth the overhead.
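To see why dependencies are the tipping point, here is a toy run-order calculation using Python’s standard-library `graphlib` (Python 3.9+). This is not Airflow’s API, and the job names are hypothetical; it only shows the ordering problem. An orchestrator like Airflow layers scheduling, retries, and alerting on top of that ordering, which is the overhead worth paying once you have several interdependent pipelines.

```python
"""Toy illustration of the dependency ordering an orchestrator manages."""

from graphlib import TopologicalSorter

# HYPOTHETICAL pipelines: each key maps to the set of jobs it depends on.
PIPELINES = {
    "ingest_ads": set(),
    "ingest_crm": set(),
    "dataform_staging": {"ingest_ads", "ingest_crm"},
    "dataform_marts": {"dataform_staging"},
    "publish_kpi_snapshot": {"dataform_marts"},
}


def run_order(graph: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order; raises graphlib.CycleError on loops."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(run_order(PIPELINES))
```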

How I’d stand this up in Week 1

If I had to build this stack from zero in just a week, here’s how it would look:

Days 1–2 — Land the data

→ I’d spin up BigQuery and Cloud Storage.

→ Then connect a handful of critical sources — ads, CRM, product events — through Fivetran or Airbyte.

All data would land raw, untransformed.

Days 3–4 — Model the basics

These two days would be about bringing in Dataform for:

→ staging models to clean and align columns,

→ marts to calculate the first round of KPIs (like CAC, ROAS, and conversion by channel).
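In the stack itself these models would be SQL in Dataform; the Python below only illustrates the logic of the two layers. The raw rows, column names, and channel labels are hypothetical, and “conversion” is assumed to mean new customers divided by clicks, which is one common definition among several. The staging step aligns labels and types across sources; the mart step joins spend and outcomes per channel and derives CAC (spend / new customers), ROAS (revenue / spend), and conversion rate.

```python
"""Sketch of staging + mart logic for CAC, ROAS, and conversion by channel."""

from collections import defaultdict

# HYPOTHETICAL raw rows, roughly as they might land from ads and CRM connectors.
RAW_ADS = [
    {"Channel": " Google Ads ", "Spend_USD": "1200.00", "Clicks": "3000"},
    {"Channel": "meta", "Spend_USD": "800.00", "Clicks": "2500"},
]
RAW_CRM = [
    {"source": "google ads", "new_customers": 40, "revenue_usd": 4800.0},
    {"source": "Meta", "new_customers": 20, "revenue_usd": 1600.0},
]


def normalize_channel(label: str) -> str:
    """Staging: align channel labels so both sources join on the same key."""
    return label.strip().lower().replace(" ", "_")


def safe_ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def channel_kpis(ads: list[dict], crm: list[dict]) -> dict[str, dict[str, float]]:
    """Mart: aggregate spend and outcomes per channel, then derive KPIs."""
    totals = defaultdict(lambda: defaultdict(float))
    for row in ads:
        channel = normalize_channel(row["Channel"])
        # Staging: cast the connector's string columns to numbers.
        totals[channel]["spend"] += float(row["Spend_USD"])
        totals[channel]["clicks"] += float(row["Clicks"])
    for row in crm:
        channel = normalize_channel(row["source"])
        totals[channel]["customers"] += row["new_customers"]
        totals[channel]["revenue"] += row["revenue_usd"]

    return {
        channel: {
            "cac": safe_ratio(t["spend"], t["customers"]),
            "roas": safe_ratio(t["revenue"], t["spend"]),
            # ASSUMPTION: conversion = new customers / clicks.
            "conversion_rate": safe_ratio(t["customers"], t["clicks"]),
        }
        for channel, t in totals.items()
    }


if __name__ == "__main__":
    for channel, kpis in channel_kpis(RAW_ADS, RAW_CRM).items():
        print(channel, kpis)
```

With the sample rows, Google Ads comes out at a CAC of 30 and a ROAS of 4, and Meta at a CAC of 40 and a ROAS of 2.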

I’d add a few lightweight tests so I could actually trust what I was seeing.
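Lightweight tests can be as small as three checks: no duplicate keys, no nulls in required columns, and values in a plausible range. Dataform has built-in assertions for this kind of check; the Python sketch below just shows the idea on hypothetical mart rows, and the column names and the spend range are assumptions to adjust for your own data.

```python
"""Sketch of lightweight data tests on a mart: unique, not-null, and range checks."""

# HYPOTHETICAL weekly mart rows; in practice these come from the modeled layer.
MART_ROWS = [
    {"channel": "google_ads", "week": "2025-01-06", "spend": 1200.0},
    {"channel": "meta", "week": "2025-01-06", "spend": 800.0},
]


def check_unique(rows: list[dict], key_cols: list[str]) -> list[str]:
    """Flag any key (e.g. channel + week) that appears more than once."""
    seen: set[tuple] = set()
    failures = []
    for row in rows:
        key = tuple(row[col] for col in key_cols)
        if key in seen:
            failures.append(f"duplicate key {key}")
        seen.add(key)
    return failures


def check_not_null(rows: list[dict], cols: list[str]) -> list[str]:
    """Flag missing values in columns every row must have."""
    return [
        f"row {i}: {col} is null"
        for i, row in enumerate(rows)
        for col in cols
        if row.get(col) is None
    ]


def check_range(rows: list[dict], col: str, low: float, high: float) -> list[str]:
    """Flag values outside a plausible range (the bounds are assumptions)."""
    return [
        f"row {i}: {col}={row[col]} outside [{low}, {high}]"
        for i, row in enumerate(rows)
        if row.get(col) is not None and not low <= row[col] <= high
    ]


def run_checks(rows: list[dict]) -> list[str]:
    """Run all checks; an empty list means the mart passed."""
    return (
        check_unique(rows, ["channel", "week"])
        + check_not_null(rows, ["channel", "week", "spend"])
        # ASSUMPTION: weekly spend per channel should sit between 0 and 1M USD.
        + check_range(rows, "spend", 0.0, 1_000_000.0)
    )


if __name__ == "__main__":
    failures = run_checks(MART_ROWS)
    print("all checks passed" if not failures else "\n".join(failures))
```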

Day 5 — Ship the view

I’d build a single Looker Studio overview with the KPIs and annotate it with:

→ metric definitions,

→ refresh times,

→ and ownership.
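One way to keep those annotations consistent is to hold them in a small catalog and render each entry as a footnote for the dashboard’s text boxes. This is a hypothetical structure, not a Looker Studio feature, and the definitions, refresh schedule, and owners shown are placeholders.

```python
"""Sketch of the annotations that travel with each dashboard KPI."""

from dataclasses import dataclass


@dataclass(frozen=True)
class MetricCard:
    name: str
    definition: str  # plain-language formula everyone agrees on
    refresh: str     # when the underlying data is refreshed
    owner: str       # who answers questions about this number


# HYPOTHETICAL entries; definitions, schedules, and owners are placeholders.
CATALOG = [
    MetricCard("CAC", "Paid media spend / new customers, same period", "Daily, 06:00 UTC", "Growth lead"),
    MetricCard("ROAS", "Attributed revenue / paid media spend", "Daily, 06:00 UTC", "Performance marketing"),
]


def as_footnote(card: MetricCard) -> str:
    """Render one line suitable for a text box under a dashboard chart."""
    return f"{card.name}: {card.definition} | Refreshed: {card.refresh} | Owner: {card.owner}"


if __name__ == "__main__":
    for card in CATALOG:
        print(as_footnote(card))
```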

That’s it.

By the end of the week, the company would have one shared source of truth answering the most important question: “Are we on track?”

Everything else — complex dashboards, heavy orchestration, advanced data science — can wait.

What I deliberately avoid

This is just as important as what I include.

1. I don’t custom-code connectors.

2. I don’t over-engineer orchestration.

3. I don’t buy heavy BI licenses before they’re needed.

4. And I definitely don’t design “future-proof” models for imaginary use cases.

Clarity beats completeness every single time.

When and how to upgrade

Of course, growth brings new needs. When volumes or latency demands explode, you might consider Snowflake or Spark for high-load use cases.

If you need a semantic layer for governance and complex metrics, Looker (the product) or a headless semantic layer can step in.

As your data science practice matures, Python pipelines and feature stores become relevant.

But the rule is always the same: unless the new tool ties directly to a KPI and a real decision, it doesn’t make the cut.

Bottom line

In 2025, the winner isn’t the team with the flashiest stack.

It’s the one that gets to clarity fastest. The 5 tools above give you that speed without bloat — and they leave plenty of room to grow when the business truly demands it.

Remember: a modern stack without defined KPIs is just a prettier spreadsheet.

Before building anything, ask yourself: Which three to five decisions must this data support every week?

Answer that, and the stack almost designs itself.

Free resource to make this easy

If you want a guided way to pick KPIs, map sources, and plan your first dashboards, this is your first-aid kit:

28‑Day Roadmap to Marketing Analytics

Define goals → map sources → choose KPIs → design the first dashboard → plan automation.

Weekly Roundup

Every week I highlight posts that hit the same nerve as this issue — reminding us that shiny stacks and AI buzzwords mean nothing without foundations. This week’s theme: simplicity, fundamentals, and the unglamorous work that makes the glamorous possible.

1. “Your AI will fail because the foundations aren’t there”

Dylan lays out a painful but necessary checklist: direction, governance, integration, maturity. The punchline is simple: AI doesn’t magically solve bad data habits; it just automates them. Without clear definitions and processes, AI turns into “prettier mistakes.”

2. “Resisting learning SQL is like resisting learning handwriting”

I love this analogy. SQL is still the lingua franca of analytics. You don’t need to be a DBA wizard — but joins, aggregates, case statements? That’s table stakes. Avoiding SQL today is like refusing to write because we have keyboards — you’re only slowing yourself down.

3. “Data Governance pros are laying the groundwork quietly”

Patrick tells a story from a conference: every hand goes up for data science, almost none for governance. But here’s the truth — data science only works when the foundation is solid. Governance is the unsexy backbone that ensures the future doesn’t collapse under its own weight. Models get the applause, governance keeps the lights on.

Together, these three posts reinforce a single idea: the boring stuff is the important stuff. Whether you’re building a stack, enabling AI, or running advanced analysis — clarity, simplicity, and trust come first.

That’s it for this week

If you’re standing up a new stack, remember:

Keep it simple, keep it fast, and tie everything to decisions.

Numbers get noticed. Stacks that drive clarity get used.

Until next time —

Nick from Valiotti Analytics